Quote: [Google Analytics] “Content Experiments sucks and I will never use it for any of my clients….run away”

The above snippet came from a post by Michael Whitaker (smart thinker, worth following) who asked for feedback on comments made by Chris Goward at the Imagine 2013 conference. My initial response was “hmmm – poor comments indeed. Whether you like a G product or not, to say that Google’s stats methods are unreliable, or reporting doesn’t work really is silly and lacks credibility.”

I am actually no big fan of the Google Analytics Content Experiments either, but I wish to put my views into context…

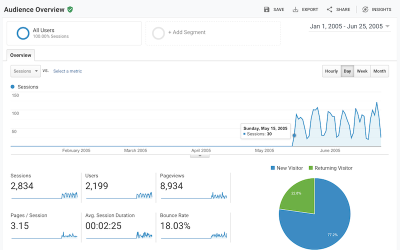

Firstly, let’s put to bed any comments about reliability, or reports not working. As any Google Analytics user knows, reliability is very, very good.

So what is the issue with Content Experiments…?

A number of people have commented on Google’s use of the multi-armed bandit model that content experiments use. In a nutshell this statistical algorithm allocates more traffic to a winning test combination over time, at the expense of a losing combination. That makes sense, right – particularly if you are an e-commerce site where money is being lost if a poorly performing test page is delivered. After all, why would you want your test traffic levels to remain static when there is a clear winner?
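To make the idea concrete, here is a minimal sketch of one common bandit approach (sampling from a Beta distribution for each variation and counting how often each one comes out on top). This is a generic illustration, not Google's actual algorithm; the function name `traffic_weights` and the example numbers are my own assumptions.

```python
import random


def traffic_weights(results, draws=10_000, seed=0):
    """Estimate, for each variation, the probability that it is the best.

    `results` maps a variation name to (conversions, visits).
    The returned shares could be used as traffic weights.
    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        # Draw a plausible conversion rate for each variation from a
        # Beta(1 + conversions, 1 + non-conversions) distribution.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + visits - conv)
            for name, (conv, visits) in results.items()
        }
        # Credit the variation with the highest sampled rate.
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Made-up data: the variation converts better, so it earns more traffic.
weights = traffic_weights({"original": (20, 1000), "variation": (35, 1000)})
```

The more evidence there is that one variation converts better, the larger its share becomes, which is exactly the behaviour that helps an e-commerce site and also what makes the method sensitive to short-lived spikes.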

The multi-armed bandit model is clever and unique in the industry…

However, in the early days of Content Experiments the algorithm was too aggressive. That is, an unexpected peak in conversions would cause traffic to be reallocated at such a rate that it could not be rebalanced if that condition subsequently changed. For example, a successful campaign for a specific product that also contained other products on its landing page would skew the conversion rates for the other products. Another example is a weather change. If you sell both umbrellas and t-shirts on a page under test and the weather turns wet for a few days, your umbrellas would be declared the winner (i.e. more sales for that period) without the algorithm waiting for the weather to change back. And there are national holidays – one-off events that mess with your data, and so on…

So, aggressive bandits have historically been a problem…!

However, this was fixed (pacified) early in 2013. Also, there is now the option to turn off this feature altogether if you wish – allowing you to keep the traffic allocation of test alternatives fixed. So as a methodology, Google Analytics Content Experiments are perfectly good for A/B testing (note, multi-variate experiments are no longer supported).
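For comparison, a fixed allocation can be sketched as a deterministic even split, where the same visitor always lands in the same variation and the shares never move. This is only an illustration of the concept and not how Content Experiments implements it; `assign_variation`, the visitor IDs and the experiment label are all hypothetical.

```python
import hashlib


def assign_variation(visitor_id, variations, experiment="exp-1"):
    """Pick a variation for a visitor using a stable hash.

    The split stays even regardless of how each variation performs.
    Hypothetical helper for illustration only.
    """
    key = f"{experiment}:{visitor_id}".encode()
    digest = hashlib.sha256(key).hexdigest()
    # Map the hash onto one of the variations.
    return variations[int(digest, 16) % len(variations)]


# The same visitor gets the same page on every visit.
page = assign_variation("visitor-42", ["original", "variation"])
```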

As I have said, I am not a fan of Content Experiments. Here’s my explanation why…

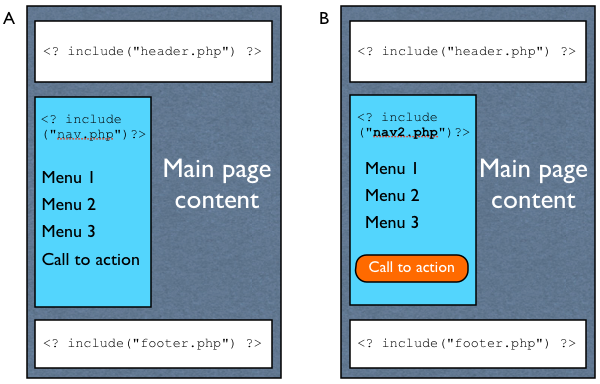

In terms of setting up and administering an experiment, Google Content Experiments really are clunky and unwieldy. This is because test pages are delivered from your server. That means it’s a headache for a page built from multiple template components, as most commercial sites are – see Figure 1. For example, a page is usually built of multiple “include” statements such as:

- for the top of page branding

- for the top navigation bar

- the side navigation, main body content

- footer etc.

Hence, in order to conduct a Content Experiment test, you would have to go to your web development team and ask them to build a page delivery system that can process variations of the above list i.e. swap out 1 for 1b, keep 2-3 in place; swap out 1 for 1c, keep 2-3 in place etc.

Figure 1 – An example A/B test page using Content Experiments requiring a separate ‘nav2.php’ page to be displayed and a delivery system to ensure that it is called correctly.

Figure 1 illustrates a test for a change to a side navigation menu. Using Content Experiments this requires the side navigation include file to be switched (nav.php or nav2.php) before the page loads. The other components are added as normal. So, if your landing page URL is currently www.mysite.com/productabc/, your web platform needs to use separate URLs for test variations e.g. www.mysite.com/productabc/?sidemenu=1 and www.mysite.com/productabc/?sidemenu=2. And your platform needs to know what to do with the extra parameter (sidemenu).
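As a rough sketch of what that delivery logic has to do, the snippet below reads the `sidemenu` parameter and picks the matching side navigation include. Only `nav.php`, `nav2.php` and the `sidemenu` parameter come from the example above; the other file names and the function `components_for` are hypothetical placeholders, and a real platform would need far more than this.

```python
from urllib.parse import parse_qs, urlparse

# Which side navigation include belongs to which test variation.
NAV_BY_VARIATION = {"1": "nav.php", "2": "nav2.php"}


def components_for(url):
    """Return the ordered list of include files for a requested URL.

    All file names except nav.php and nav2.php are placeholders.
    """
    query = parse_qs(urlparse(url).query)
    # Fall back to the original menu when the parameter is missing.
    variation = query.get("sidemenu", ["1"])[0]
    nav = NAV_BY_VARIATION.get(variation, "nav.php")
    return ["header.php", "topnav.php", nav, "body.php", "footer.php"]


parts = components_for("www.mysite.com/productabc/?sidemenu=2")
```

Even in this toy form you can see the problem: every new test means another parameter, another include file and another change to the code that assembles the page.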

Basically, unless you are the web dev agency/team, a request to change the entire system that delivers your web content by this method is not going to happen in any reasonable time frame (if ever)…

Here’s my rule for choosing a testing tool:

Once you have built your hypothesis and decided what you wish to change on your page(s), the creation of an A/B experiment should take under 1 hour. Choose your tool based on that estimate….

An alternative approach

Tools such as Optimizely and Visual Website Optimizer work in a different way. The result is that experiments such as Figure 1 are possible and doable in a matter of minutes – without the need to change your website delivery system! Their technique is to modify the content of your page ‘on-the-fly’ by manipulating your page content with JavaScript and CSS. That means that variations can be built within their admin interface. The downside of this method is that there is a slight delay added to your page loading. This happens while the test script figures out the combination requested and then alters the content on-the-fly as your page loads.

In my experience, the benefit of a much easier experiment setup far outweighs any page loading delay. So Optimizely and Visual Website Optimizer have the edge. However, as you can see, this approach also has its downside and some clients will not tolerate any delay in page loading – a fair point.

I would love to hear your thoughts on the merits or otherwise of Content Experiments.

client side experiments are possible with google analytics: https://developers.google.com/analytics/solutions/experiments-client-side

@Andrej – yes possible (and Pete does an excellent job describing the steps). However my point with this post is about how easy it is to build and manage experiments. Client-side technologies give the opportunity for a drag-and-drop interface for re-organising/changing page content that is great for users working through the challenges of testing. Content Experiments do not have this ability, even with Pete’s workaround. My view was that CE needs a fundamental rethink – which has now happened with the new Analytics 360 Suite announcement 🙂

Hi!

I agree with some points in this article. Google Analytics Content Experiments is too unwieldy. And it’s not so easy to implement A/B tests with this service, especially if you are a digital marketer and your developers can’t make new versions of the website in a short timeframe.

That’s why we have developed an interesting A/B testing solution on top of Google Content Experiments – Changeagain.me.

It also has full integration with GA, so you don’t need to create new goals and can do really deep post-test analysis.

But the main difference from Content Experiments is an easy-to-use visual editor. It lets you create A/B tests within 5 minutes without any coding skills or support from the development department. We also added some additional features that are available in Optimizely, VWO and some other commercial A/B testing tools.

So, check it out if you are interested in a very easy and strong tool for A/B testing with Google Analytics integration.

Does anybody have experience using this plugin in WordPress? http://wordpress.org/plugins/google-content-experiments/developers/ It has good reviews, but I was just wondering if it was extremely basic or if it actually does a good job of testing different pages as well as sidebars, header navs, etc.

@Hugh I’m not sure if that was meant as a sardonic comment, but I’ll take it at face value.

I’d say, “Congratulations!”

I’ve never said it’s impossible to get good results with CE. Of course it’s possible. It’s possible to achieve results with plenty of inferior tools. There are people who’ve even had great results using AdWords to alternate landing pages. That certainly doesn’t mean I’d recommend it.

And I’d say, if CE works for you, that’s great. Keep going with it! As long as you’re using something to test and you know how to interpret the results.

Hi Chris,

out of interest what would you say to people who have achieved lasting results using CE?

Hugh

Hi guys. First, thanks for watching! I just stumbled across this blog post now.

To answer your question, when I said “unreliable”, I was referring to the multi armed bandit algo.

Yes, there’s a way to turn it off now, but for the GA target audience looking for a free tool, most won’t understand how or why to do that, making their results for them unreliable. I’m talking from a business perspective, not from a statistical perspective. Most people looking for results look to the tool’s report to make a business decision and, too often, the results will show a “loser” variation that really didn’t have a chance.

For anyone more sophisticated in testing than the “free” audience, the other limitations of the tool that you mention, Brian, will be too great to use it. That’s why I can’t recommend it.

As far as I know, Google stopped investing in developing it long ago, and it shows. The rest of GA is awesome, but CE lacks the basics to be useful.

As one of the original GWO WOACs, we held out the longest promoting it until G killed it. Unfortunately, the replacement was inferior and there are so many better tools on the market now, it’s unsupportable.

@Jacques – agreed. I explain it to clients as:

Testing is not something you sprinkle on top of your website. It’s a continuous learning process. Much the same way that ensuring you have good quality data (or traffic) is.

@Jacob – Being in charge of all things digital makes Content Experiments viable for you. That said, I run testing on this site (also WordPress) and CE was just too clunky and time consuming to be able to set up i.e. test menu bars and layout. I would be interested in your experiences.

@Hugh – Free is certainly an advantage. However bear in mind that Optimizely starts at $20 per month! I am not affiliated with them (other than I know one of the founders), but in my view anything less than $100/month is effectively free i.e. my time is worth more than that…

Here’s my general rule for guidance:

Once you have built your hypothesis and decided what you wish to change on your page(s), the creation of an A/B experiment should take under 1 hour. Choose your tool based on that estimate….

Hi Brian,

I think both Jacques and Jacob make excellent points. One thing that GCE has going for it over the others is that it’s free. I don’t think that should be underestimated.

As Jacques says, testing uptake has been surprisingly slow, but I think that one of the most compelling arguments for testing using GCE is that it costs less. So even if the test doesn’t go your way, you as the stakeholder will know that your financial liability will be less; in other words, the potentially awkward post-test conversation about what was learnt won’t include such a big bit on how it was all a bit of a waste of money (notwithstanding cost of time to implement).

I also think that from a pragmatic point of view the fact that GCE lives right next door to the kind of data that plays a significant part in stimulating an experiment is a benefit. So in this other respect [direct] access to the testing tool is also a help.

Those aren’t really the best reasons for choosing GCE over its competitors, but we’re all human and I think they have some bearing, especially for first-time or beginner testers.

I work for a small business that’s going to start using CE.

We’re undergoing a complete WordPress site rebuild and redesign, and we’ve let the web developer know in advance that we’re going to use CE. This means the whole site has been designed to make it as easy as possible to create duplicate versions of a page with a given variation.

Because I’ll be responsible for the whole process of testing – from coming up with the original ideas, to creating the tests (commissioning developers/designers as needed) and monitoring the results – CE should work for me.

Also, as a small business, the fact that CE is free gives it a significant advantage over its competitors. And given the relatively low traffic of our site, a tool that does A/B splits well is all I need.

We’ll have to see if CE proves to work well for me in practice. I do know that I’m looking forward to doing some testing that is part of the GA interface I’m already familiar with. I’m of the view that if I decide I want something more advanced (or if having multiple URLs becomes too tricky) I will at least be in a better position to judge what exactly it is I want out of a testing tool.

What also amazes me is the overall, let’s use the word, *failure* of testing in digital analytics. In 2005, I was Offermatica’s exclusive Canadian partner; I explained then to my bosses that within the next year, MVT would be all the rage, because it made so much sense as a natural evolution of web analytics, since the latter was all about the optimization paradigm. It never took off.

Years later, Google abandoned their Web Site Optimizer to keep only the A/B component, and still, very few companies use it.

I think lots of them realized, as the people I pitched back in 2005, that MVT is a darn hard thing to do, and involves tons of resources to produce all those versions/recipes/scenarios you’re supposed to test. I think testing is just too hard for lazy marketers who would rather get lucky and make a quick buck, instead of deeply understanding what really works. But in their defense, once you took the testing road, you can’t stop, mainly because of gains half-lives, and unless you really gear up for the task, it is just too complicated to do…

Hi Brian,

Nice post, well balanced as always but I think there is more to cover in terms of the client side API and the server side API.

The client side API is a big (but not the final) step towards Optimizely/VWO style visual testing functionality. Technical knowledge is still required BUT you can see where the product is going, and testing without redirects is, as you describe, far preferable to clunky and hard-to-implement redirect-based tests.

So, to keep the dialogue flowing, do you have any thoughts on the SERVER-side API?

Are you going to the GACP Summit? Do you think we’ll see any announcements regarding GACE?

Regards

Doug

@Doug – I think the Content Experiments feature of GA is lacking love at the moment (i.e. resources) as development has been glacial. It will be surprising to hear if anything is happening with CE in the near future.

To answer your question on the server-side API, until (or even if) CMS platforms have this baked into their feature set, I don’t see this as a viable solution. It’s kind of the same situation server-side analytics found itself in 5 years ago. That is, it’s a nice idea and has some advantages, but in reality client-side is likely to dominate…

BTW, I will be at the summit this year (my 9th…). See you there 🙂