Keeping a local copy of your Google Analytics data can be very useful for your organisation: for example, for third-party data audits, reprocessing data, troubleshooting, and viewing data older than 25 months (Google’s current data retention commitment). Having a local copy of the collected data allows you to achieve all of these, and it protects you from the accidental or malicious deletion of your GA account and profiles.

If you are looking for an outsourced backup service for Google Analytics, contact GA-Experts.com, who can do this all for you…

[This post updated: 04-Aug-2009]

Benefits of keeping a local copy of Google Analytics visitor data

What you can do with a local copy of your data:

- Greater control over your data e.g. for third-party audits

- Troubleshoot Google Analytics implementation issues

- Process historical data as far back as you wish – using Urchin

- Re-process data when you wish – using Urchin

Use the following two-step guide to keep a local copy: 1) modify your Google Analytics Tracking Code (the GATC); 2) add a small transparent GIF file to your web root.

1. Modifying your GATC

If you are still using urchin.js for your Google Analytics tracking, then you should upgrade to ga.js! However, if that is not possible just yet, add the following line immediately before the call to urchinTracker():

For urchin.js users:

var _userv=2;

For ga.js users, add the following line immediately before pageTracker._trackPageview():

pageTracker._setLocalRemoteServerMode();

For ga.js async users, add the following line immediately before the _gaq.push(['_trackPageview']); call:

_gaq.push(['_setLocalRemoteServerMode']);
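For reference, here is a minimal sketch of how the async command queue reads with the extra command in place. It assumes the standard asynchronous ga.js snippet; 'UA-XXXXX-X' is a placeholder account ID, and the loader that fetches ga.js itself is left out because only the order of the queued commands matters here.

```javascript
// Async ga.js command queue (sketch). 'UA-XXXXX-X' is a placeholder account ID;
// the usual loader that inserts the ga.js <script> tag is omitted.
var _gaq = _gaq || [];
_gaq.push(['_setAccount', 'UA-XXXXX-X']);
// Added line: also request __utm.gif from your own server so the hit is logged locally.
// It goes immediately before _trackPageview, as described above.
_gaq.push(['_setLocalRemoteServerMode']);
_gaq.push(['_trackPageview']);
```

When ga.js loads, it works through the queued commands in order, so the local-logging setting is already in effect when the pageview is sent.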

2. Add __utm.gif to your web server root

As a consequence of step 1, your page requests a file named __utm.gif from your web server as it loads. This is simply a 1×1 pixel transparent image that Urchin uses for processing. In fact, it is the only line in your logfile that Urchin uses (apart from error pages and robot activity). You can create the file yourself or use this __utm.gif file (right-click, then Save As). Upload it to your document root, i.e. where your home page resides.

That’s it! With these two simple steps, Google Analytics visitor data is written to your web server logfiles at the same time as it is sent to Google Analytics for processing. This is easy to achieve because web servers log their activity by default, usually in plain text format.

Once implemented, open your logfiles to verify the presence of additional __utm.gif entries that correspond to the visit data as ‘seen’ by Google Analytics.

A typical Apache logfile line entry (line wrapped here) looks like this:

86.138.209.96 www.mysite.com - [01/Oct/2007:03:34:02 +0100] "GET /__utm.gif?utmwv=1&utmt=var&utmn=2108116629 HTTP/1.1"
200 35 "http://www.mysite.com/pageX.htm" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322)"
"__utma=1.117971038.1175394730.1175394730.1175394730.1; __utmb=1; __utmc=1;
__utmz=1.1175394730.1.1.utmcid=23|utmgclid=CP-Bssq-oIsCFQMrlAodeUThgA|utmccn=(not+set)|utmcmd=(not+set)|utmctr=looking+for+site; __utmv=1.Section One"

[GA-Experts.co.uk has a best practice guide on configuring an Apache logfile format]
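To work with entries like this outside Urchin, each line can be split into its fields. The sketch below assumes the exact layout of the Apache example above (client IP, virtual host, a '-' placeholder, timestamp, request, status, bytes, referrer, user agent and a quoted Cookie header). The regular expression and function names are illustrative, so adapt them to the LogFormat your server actually uses.

```javascript
// Sketch: split one __utm.gif entry, in the layout of the Apache example above, into
// fields. The regular expression is an assumption tied to that layout:
// IP, virtual host, '-', [time], "request", status, bytes, "referrer", "user agent", "cookies".
const APACHE_UTM_RE =
  /^(\S+) (\S+) (\S+) \[([^\]]+)\] "(\S+) (\S+) ([^"]*)" (\d{3}) (\S+) "([^"]*)" "([^"]*)" "([^"]*)"$/;

// Turn 'a=1; b=2' into { a: '1', b: '2' }; values may themselves contain '='.
function parseCookieHeader(header) {
  const cookies = {};
  for (const part of header.split(';')) {
    const pair = part.trim();
    const eq = pair.indexOf('=');
    if (eq > 0) cookies[pair.slice(0, eq)] = pair.slice(eq + 1);
  }
  return cookies;
}

function parseApacheUtmLine(line) {
  const m = APACHE_UTM_RE.exec(line);
  if (!m) return null; // not in the expected layout
  const [, ip, host, , time, method, url, , status, bytes, referrer, userAgent, cookie] = m;
  const q = url.indexOf('?');
  return {
    ip,
    host,
    time,
    method,
    path: q === -1 ? url : url.slice(0, q),
    status: Number(status),
    bytes: bytes === '-' ? 0 : Number(bytes),
    referrer,
    userAgent,
    // utmX name-value pairs added by the GATC ('+' decodes to a space)
    utm: Object.fromEntries(new URLSearchParams(q === -1 ? '' : url.slice(q + 1))),
    cookies: parseCookieHeader(cookie),
  };
}
```

Lines that do not match the assumed layout return null rather than a partially filled record, which makes a misconfigured LogFormat easy to spot.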

For Microsoft IIS, the format (line wrapped) can be as follows:

2007-10-01 01:56:56 68.222.73.77 - - GET /__utm.gif utmn=1395285084&utmsr=1280x1024&utmsa=1280x960

&utmsc=32-bit&utmbs=1280x809&utmul=en-us&utmje=1&utmce=1&utmtz=-0500&utmjv=1.3&utmcn=1&utmr

=http://www.yoursite.com/s/s.dll?spage=search%2Fresultshome1.htm&startdate=01%2F01%2F2010&

man=1&num=10&SearchType=web&string=looking+for+mysite.com&imageField.x=12&imageField.y=6&utmp

=/ 200 878 853 93 - - Mozilla/4.0+(compatible;+MSIE+6.0;+Windows+NT+5.1;+SV1;+.NET+CLR+1.0.3705;

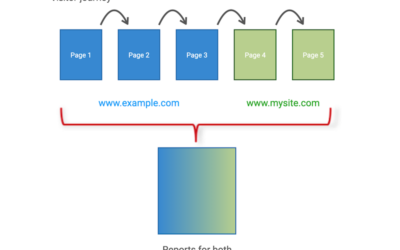

+Media+Center+PC+3.1;+.NET+CLR+1.1.4322) - http://www.yoursite.com/In both examples, the augmented information applied by the GATC is the addition of utmX name value pairs. This is known as HYBRID data collection – the benefits of which are discussed in the post Software v Page Tags v HYBRIDS .
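IIS writes W3C extended logs, in which a #Fields: directive names the space-separated columns. This sketch uses that directive to locate cs-uri-stem and cs-uri-query rather than hard-coding positions, because which columns appear depends on your IIS logging settings; the function name is illustrative. It keeps only parameters whose names start with utm, since, as in the IIS example above, an unencoded utmr referrer can spill its own parameters (num, SearchType and so on) into the query string.

```javascript
// Sketch: extract utm* parameters from __utm.gif requests in an IIS (W3C extended)
// log. Column positions come from the most recent #Fields: directive, because the
// fields IIS writes depend on its logging configuration.
function parseIisUtmHits(logText) {
  let fields = [];
  const hits = [];
  for (const line of logText.split(/\r?\n/)) {
    if (line.startsWith('#Fields:')) {
      fields = line.slice('#Fields:'.length).trim().split(/\s+/);
      continue;
    }
    if (line === '' || line.startsWith('#')) continue; // other directives
    // W3C extended logs separate fields with spaces ('+' replaces spaces in values).
    const values = line.split(' ');
    const record = {};
    fields.forEach((name, i) => { record[name] = values[i]; });
    if (record['cs-uri-stem'] !== '/__utm.gif') continue;
    const query = record['cs-uri-query'] === '-' ? '' : record['cs-uri-query'] || '';
    // Keep only utm* keys: an unencoded utmr referrer can leak its own parameters.
    const utm = {};
    for (const [key, value] of new URLSearchParams(query)) {
      if (key.startsWith('utm')) utm[key] = value;
    }
    hits.push({ date: record.date, time: record.time, clientIp: record['c-ip'], utm });
  }
  return hits;
}
```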

The benefits explained in detail

1. Greater control over your data

Some organisations simply feel more comfortable having their data sitting physically within their premises and are prepared to invest in the IT resources to do so. Of course, you cannot simply run this data through an alternative web analytics vendor, as the GATC page tag information will be meaningless to anyone else. However, it does give you the option of passing your data to a third-party auditing service such as ABCE. Audit companies verify website visitor numbers, which is useful for content publishing sites that sell advertising and therefore wish to validate their rate cards.

NOTE: Be aware that when doing this, protecting the privacy of your end users (your visitors) is your responsibility, and you should be transparent about this in your privacy policy.

2. Troubleshoot Google Analytics implementation issues

A local copy of GA visit data is very useful for troubleshooting Google Analytics installations. This is possible because the logfile entries show each pageview as it is captured, in near real time, so you can trace whether you have implemented tracking correctly, particularly non-standard tracking such as PDF, EXE or other download file types.
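As an example of this kind of troubleshooting, the sketch below compares, for each download file, how many times the file itself was served with how many virtual pageviews the page tag recorded for it. It assumes Apache-style request lines and that downloads are tagged with virtual pageviews whose utmp path matches the file’s real path (for example /downloads/guide.pdf); the extension list is also an assumption. A file that is regularly served but never tagged suggests a missing tracking call; small differences are expected, because the two methods measure different things.

```javascript
// Sketch: compare tagged downloads (virtual pageviews sent to __utm.gif) with the
// download files actually served, per file path. Assumptions: Apache-style request
// lines, virtual pageview paths equal to the real file paths, and this extension list.
const DOWNLOAD_EXT = /\.(pdf|exe|zip|doc|xls)$/i;

function compareDownloadTracking(lines) {
  const counts = {}; // file path -> { tagged, served }
  const bump = (file, key) => {
    counts[file] = counts[file] || { tagged: 0, served: 0 };
    counts[file][key] += 1;
  };
  for (const line of lines) {
    const req = /"GET (\S+) HTTP\/[\d.]+"/.exec(line);
    if (!req) continue;
    const url = req[1];
    const q = url.indexOf('?');
    const pathname = q === -1 ? url : url.slice(0, q);
    if (pathname === '/__utm.gif') {
      // A page tag hit: the tracked path is in the utmp parameter.
      const page = new URLSearchParams(q === -1 ? '' : url.slice(q + 1)).get('utmp');
      if (page && DOWNLOAD_EXT.test(page)) bump(page, 'tagged');
    } else if (DOWNLOAD_EXT.test(pathname)) {
      // A direct request for the file itself.
      bump(pathname, 'served');
    }
  }
  return counts;
}
```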

3. Process historical data as far back as you wish – using Urchin

As mentioned earlier, Google Analytics currently stores reports for up to 25 months*. If you want to keep reports for longer, you could purchase Urchin software and process your local data as far back as you wish. The downloadable software runs on a local server and processes web server logfiles, including HYBRID data. Although not as feature-rich as GA, Urchin does allow you to view historical data over any time period you have data for, as well as providing information complementary to GA.

For further information on Urchin software, view the post What is Urchin?

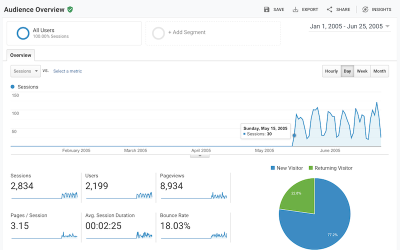

[*Google have made no attempt to remove data older than 25 months, as the screenshot above shows.]

NOTE: Reports from Urchin will not align 100% with reports from Google Analytics, as these are two different data collection techniques. For example, a logfile solution tracks whether a download completes, while a page tag solution only tracks the onclick event; these are not always going to be the same thing. Read the accuracy whitepaper for a detailed discussion of accuracy issues affecting web analytics.

4. Re-process data when you wish – using Urchin

With data and the web analytics tool under your control, you can apply filters and process data retroactively. For example, say you wished to create a separate profile just to report on blog visitors. This is typically done by applying a page-level filter, that is, include all pageview data from the /blog directory. For Google Analytics, the new profile’s reports are populated only from the moment that filter is applied, i.e. from that point forward. With Urchin software, you can also reprocess older data to view the blog reports historically.
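Because the raw __utm.gif lines are kept, a new filter can be run over the whole archive at any time. The sketch below applies a /blog/ include filter to archived Apache-style lines and counts pageviews per month. It assumes ga.js-style hits that carry the page path in utmp, and it treats any hit with a utmt parameter (such as the utmt=var hit in the Apache example above) as a non-pageview. This is a simplified illustration of retroactive filtering, not a reproduction of what Urchin does internally.

```javascript
// Sketch: apply an include filter retroactively to archived __utm.gif lines and
// count matching pageviews per month. Assumes Apache-style timestamps such as
// [01/Oct/2007:03:34:02 +0100] and ga.js-style hits that carry the page path in utmp.
const MONTHS = {
  Jan: '01', Feb: '02', Mar: '03', Apr: '04', May: '05', Jun: '06',
  Jul: '07', Aug: '08', Sep: '09', Oct: '10', Nov: '11', Dec: '12',
};

function monthlyPageviews(lines, includePrefix) {
  const perMonth = {}; // 'YYYY-MM' -> pageview count
  for (const line of lines) {
    const hit = /\/__utm\.gif\?([^\s"]+)/.exec(line);
    const when = /\[\d{2}\/(\w{3})\/(\d{4}):/.exec(line);
    if (!hit || !when) continue;
    const params = new URLSearchParams(hit[1]);
    const page = params.get('utmp');
    // Treat hits with a utmt parameter (e.g. utmt=var) as non-pageviews and skip them,
    // along with pages outside the filter.
    if (!page || params.has('utmt') || !page.startsWith(includePrefix)) continue;
    const key = `${when[2]}-${MONTHS[when[1]]}`;
    perMonth[key] = (perMonth[key] || 0) + 1;
  }
  return perMonth;
}
```

Called as monthlyPageviews(archivedLines, '/blog/'), it returns counts keyed by month (for example '2007-10') for every month present in the archive, including months before the filter was defined.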

The disadvantage of keeping a local copy of visitor data

These aren’t so much disadvantages as serious overhead considerations:

- Maintaining your own logfiles is an IT responsibility. Web server logfiles get very large, very quickly. As a guide, every 1,000 visits produces approximately 4 MB of log data. So 10,000 visits per month is roughly 500 MB per year (10 × 4 MB × 12 months = 480 MB). If you have 100,000 visits per month, that’s about 5 GB per year, and so on. These are only estimates; for your own site, the figures could easily be double. In addition to the collected data, Urchin also requires disk space for its processed data (stored in a MySQL database). Although this will always be smaller than the raw collected data, storing and archiving all of it is an important task: if you run out of disk space, files and databases start to overwrite each other, and corruption of this kind is almost impossible to recover from.

- If you maintain your own visitor data logfiles, the security and privacy of the collected information (about your visitors) become your responsibility.
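The storage rule of thumb above translates into a simple estimate. In this sketch, the 4 MB per 1,000 visits figure is the default, and the optional safetyFactor (an illustrative parameter) lets you allow for logs that turn out to be double the estimate. Urchin’s own database needs to be budgeted separately.

```javascript
// Back-of-the-envelope yearly raw log size, using the rule of thumb of
// roughly 4 MB of log data per 1,000 visits. Treat the result as a rough guide only.
function estimateYearlyLogMB(visitsPerMonth, mbPer1000Visits = 4, safetyFactor = 1) {
  return (visitsPerMonth / 1000) * mbPer1000Visits * 12 * safetyFactor;
}
```

For 10,000 visits per month this gives 480 MB a year (about 500 MB); for 100,000 visits per month, 4,800 MB (about 5 GB); and with a safety factor of 2, 10,000 visits per month becomes 960 MB.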

Did you find this post useful? Please share your thoughts via a comment.

BTW, take a look at AngelFish as a possible replacement for Urchin:

http://analytics.angelfishstats.com/

Hi Brian

I am interested in keeping a local copy of GA data for a start-up I am working with. Will this work if I just have GA, or do I also need to have purchased the Urchin product?

Essentially, I want pageviews stamped with visitor ID, visit ID and timestamp. (I can get these through the API using custom variables, but raw files are preferred for a number of reasons.) If the method above doesn’t work with standalone GA, I guess we could write some code to read the GA cookie and create these log files. That might be preferable because, as far as I know, this is not a widely used function, and maybe there is a risk it will be deprecated?

Many thanks for your insight. Love to meet in person if you find yourself in Ireland!

Best wishes

Niamh

@Niamh – this method is not applicable any more as Urchin sales have been discontinued. Essentially saving a local copy of the data is a bit useless if there is no tool to analyse it.

BTW, be careful with any kind of visitor ID labelling using GA. It is against the Terms of Service to collect such data – even if anonymous. That is, all GA reporting is at the aggregate level.

With this, does the data get replicated on the usual GA server AND your local server, or is it recorded only on your local server and not on the GA server?

*-* This is really too complicated for me, but I’ll try to follow this blog… analytics is simply the most important tool for promoting my business.

Hi Brian, I’m using GA data to generate web traffic reports, but it’s taking too long to get data from GA, so I want to store it locally in my database. Is there any way I can store it like this, and can I run a cron job to fetch the data overnight?

Heena: For storing and/or backing up your Google Analytics data, please see:

http://www.advanced-web-metrics.com/blog/2007/10/17/backup-your-ga-data-locally/

Cool! This helps. Thanks Brian

Kiran: In Urchin, setting the method of log file processing to UTM means that it will only process these lines for pageview data. Errors and file downloads will also be reported on.

Event Tracking (not publicly released) is a Google Analytics-only feature at present.

This is really cool!

I have a question. May sound a bit stupid.

How do I re-create the stats using the “utm.gif”ed log file and Urchin? As the log file will have extra lines (or calls) to utm.gif in addition to the usual weblog lines, how do I tell Urchin to use the utm lines alone? Also, will I be able to back up the event-tracking related stuff using this approach?

Sergio: You can’t back up data that you were not streaming to your logfiles at the time. However, if you are logging your web server activity, you could process it through Urchin: http://www.advanced-web-metrics.com/blog/2007/10/16/what-is-urchin/

This can only be done by identifying visits by their IP address + user-agent combination, which is notoriously inaccurate, but it could at least give you an idea. Moving forward, you should use the HYBRID method for greater accuracy.

Nathaniel: I am in the process of updating my previous posts for the new ga.js implementation. The equivalent ga.js method is:

_setLocalRemoteServerMode()

The migration guide can help you with this:

http://www.google.com/support/analytics/bin/answer.py?hl=en&answer=76305

Hi Brian,

Interesting idea. I notice that this uses the old version of the GA code. Has this been tested with the latest version?

Nathaniel

Hi Brian, excellent article!

Do you know of any way to back up previously acquired GA data?

Now this is interesting, I didn’t know you could do this. Off now to try it and see how it works in practice, and whether I can usefully analyse it using something other than Urchin 🙂