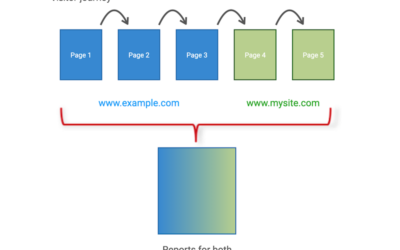

Last year I wrote a whitepaper on web analytics accuracy. It was intended as a reference guide to all the accuracy issues that on-site web measurement tools face, and to how you can mitigate the error bars. Having recently updated the whitepaper, I also wanted to illustrate how close (or not) different vendor tools on the same website can be when it comes to counting the basics: visits, pageviews, time on site and visitors.

To do this, I have looked at two very different web sites with two tools collecting web visitor data side by side:

- Site A – This blog, running Google Analytics and Yahoo Web Analytics. According to Google, there are 188 pages in the Google Index and traffic is approximately 10,000 visits/month

- Site B – A retail site that runs Nielsen SiteCensus and Google Analytics (site to remain anonymous). According to Google, there are 12,808 pages in the Google Index and traffic is approximately 1 million visits/month

These are obviously two very different web sites with two different objectives…

Site A is relatively small in content and visit volume, and its purpose is to drive engagement, i.e. readership and reader interaction via comments, ratings, click-throughs, etc. For this site, I had complete control of the deployment of both web analytics tools. This enabled me to have a best practice deployment of both Google Analytics and Yahoo Web Analytics.

Site B is approximately 100x larger in terms of content and traffic. Its main objective is to drive sales via online transactions. For this site, I had complete control of the Google Analytics implementation, with no control of the SiteCensus implementation as this was before my time. However, as the tool was professionally installed, I am assuming it was a best practice deployment.

Both tools use first party cookies for visitor tracking.

Results

I analysed the reports for August, September, October 2008 and took the average of the reported differences between the two tools for the following 5 metrics:

- Visits – also known as the number of sessions

- Pageviews – also known as the number of page impressions

- Pages/visit – the average number of pageviews per visit

- Time on site – the average time a visit lasts

- Unique visitors

Each of the three months was compared separately, rather than as a single 90-day period, in order to reduce the influence of outliers. A monthly interval also reduced the effect of cookie deletion on tracking unique visitors: the longer the time period, the greater the chance of the original ID cookie being lost or deleted, and therefore the greater the inflation of unique visitors. The approach was validated by comparing randomly selected weeks, which showed almost identical differences to the monthly comparisons. The results are shown in Tables 1 and 2.
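The averaging step can be sketched in a few lines of Python. This assumes each month is summarised as a ratio (first tool's count divided by the second tool's count), which is how the monthly columns in Tables 1 and 2 present the data; the function name is hypothetical.

```python
from statistics import mean


def average_difference(monthly_ratios: list[float]) -> float:
    """Mean of the monthly ratios expressed as a fractional difference.

    Each ratio is assumed to be tool_a_count / tool_b_count for one month,
    so a result of 0.117 means tool A reported about 11.7% more on average.
    """
    if not monthly_ratios:
        raise ValueError("need at least one monthly ratio")
    return mean(monthly_ratios) - 1.0


# Monthly visit ratios (Google Analytics / Nielsen SiteCensus) from Table 1.
visits = [1.18, 1.09, 1.08]
print(f"{average_difference(visits):+.1%}")  # +11.7%
```

Averaging per-month ratios, rather than dividing two 90-day totals, keeps each month's comparison independent, in line with the month-by-month approach described above.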

Table 1: Google Analytics versus Nielsen SiteCensus (Site B). Monthly values are the ratio of the Google Analytics figure to the Nielsen SiteCensus figure.

| Metric | August | September | October | Average difference |
|---|---|---|---|---|
| Visits | 1.18 | 1.09 | 1.08 | +11.7% |
| Pageviews | 1.22 | 1.10 | 1.11 | +14.3% |
| Pages/visit | 1.03 | 1.02 | 1.02 | +2.3% |
| Time on site | 1.13 | 1.12 | 1.12 | +12.3% |
| Unique visitors | 1.09 | 1.02 | 1.02 | +4.3% |

Table 2: Google Analytics versus Yahoo Web Analytics (Site A). Monthly values are the ratio of the Google Analytics figure to the Yahoo Web Analytics figure.

| Metric | August | September | October | Average difference |
|---|---|---|---|---|
| Visits | 0.95 | 0.95 | 0.98 | -4.0% |
| Pageviews | 1.07 | 1.07 | 1.11 | +8.3% |
| Pages/visit | 1.12 | 1.12 | 1.13 | +12.3% |
| Time on site | 1.25 | 1.46 | 1.18 | +29.7% |
| Unique visitors | 0.95 | 0.96 | 0.99 | -3.7% |
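Because pages/visit is total pageviews divided by total visits, its ratio between two tools should equal the pageviews ratio divided by the visits ratio: (PV_a / V_a) / (PV_b / V_b) = (PV_a / PV_b) / (V_a / V_b). A quick sanity check of the monthly values in Tables 1 and 2 might look like this. The helper name and the 0.02 tolerance are illustrative assumptions; the tolerance is there because the published ratios are rounded to two decimals.

```python
def implied_pages_per_visit_ratio(pageview_ratio: float, visit_ratio: float) -> float:
    """(PV_a / V_a) / (PV_b / V_b) rewritten as (PV_a / PV_b) / (V_a / V_b)."""
    return pageview_ratio / visit_ratio


# Monthly ratios (August, September, October) copied from Tables 1 and 2.
tables = {
    "Table 1": {"pageviews": [1.22, 1.10, 1.11], "visits": [1.18, 1.09, 1.08],
                "pages_per_visit": [1.03, 1.02, 1.02]},
    "Table 2": {"pageviews": [1.07, 1.07, 1.11], "visits": [0.95, 0.95, 0.98],
                "pages_per_visit": [1.12, 1.12, 1.13]},
}

# Inputs are rounded to two decimals, so allow a small tolerance.
TOLERANCE = 0.02

for name, t in tables.items():
    for pv, v, reported in zip(t["pageviews"], t["visits"], t["pages_per_visit"]):
        implied = implied_pages_per_visit_ratio(pv, v)
        consistent = abs(implied - reported) <= TOLERANCE
        print(f"{name}: implied {implied:.3f}, reported {reported:.2f}, consistent={consistent}")
```

This also shows why the pages/visit difference in Table 1 is small: Google Analytics counts more visits and more pageviews by similar proportions, so the two effects largely cancel.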

Observations & Comments

For both implementations of web analytics there is a relatively low variance (spread of values) in the reported metrics.

Site B

- Google Analytics reported slightly higher numbers than Nielsen SiteCensus for all 5 metrics. The largest difference for any one month is a 22% higher pageview count as measured by Google Analytics.

- On further investigation, it was discovered that the SiteCensus page tagging was not 100% complete; that is, some pages were missing the SiteCensus data collection tags.* This was due to the use of multiple content management systems after the initial implementation. SiteCensus would therefore undercount metrics compared with the more complete (and verified) Google Analytics deployment.

  *It was estimated that approximately 6% of pages were missing the SiteCensus page tag, though how many monthly pageviews this related to is unknown. The page tags were checked using the methods discussed in Troubleshooting Tools for Web Analytics.

Site A

- Google Analytics reported slightly lower numbers than Yahoo Web Analytics for visits and unique visitors, and slightly higher numbers for the other metrics. The variance is also low, except for time on site, which appears significantly higher when reported by Google Analytics: as much as 46% higher than Yahoo Web Analytics in September.

- As both tools were verified as having a best practice deployment of page tags, the conclusion was that the large differences in time on site must be due to the different ways the two tools calculate this metric:
  - Yahoo Web Analytics: time on site = time of last pageview − time of first pageview
  - Google Analytics: time on site = time of last hit − time of first hit

A Google Analytics “hit” is the information sent by the tracking code page tag. This can be any of the following: a pageview, a transaction item, the setting of a custom variable, an event (e.g. a file download), or a click on an outbound link.

For Site A, custom variables, PDF file downloads and outbound links are all tracked with Google Analytics and account for a significant proportion of visitor activity. These were not being tracked with Yahoo Web Analytics. PDF file downloads in particular are thought to have the biggest impact on the calculated time on site, as a visitor is likely to pause to read them before continuing to browse the website. File downloads are therefore the likely cause of the larger discrepancies in this metric.
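A minimal sketch of the two time-on-site definitions applied to a single visit makes the effect of non-pageview hits easier to see. The `Hit` record, the hit-type strings and the timestamps are hypothetical; this only illustrates the two formulas quoted above, not either vendor's actual processing.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    seconds: int  # seconds since the visit started (hypothetical timestamps)
    kind: str     # hypothetical labels, e.g. "pageview", "event", "outbound_link"


def time_on_site_pageviews_only(hits: list[Hit]) -> int:
    """Time of last pageview minus time of first pageview."""
    times = [h.seconds for h in hits if h.kind == "pageview"]
    return max(times) - min(times) if times else 0


def time_on_site_all_hits(hits: list[Hit]) -> int:
    """Time of last hit minus time of first hit, whatever the hit type."""
    times = [h.seconds for h in hits]
    return max(times) - min(times) if times else 0


# Hypothetical visit: two pageviews, then a PDF download tracked as an event
# after the visitor has spent a few minutes on the second page.
visit = [
    Hit(0, "pageview"),
    Hit(40, "pageview"),
    Hit(220, "event"),  # PDF download is the last hit of the visit
]

print(time_on_site_pageviews_only(visit))  # 40
print(time_on_site_all_hits(visit))        # 220
```

Whenever a tracked event or outbound click occurs after the last pageview, the hit-based definition records a longer visit; a single-pageview visit followed by a download would score zero under the pageview-only definition.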

Conclusions

The methodology of page tagging with JavaScript in order to collect visit data has now been well established over the past 8 years or so. Given a best practice deployment of Google Analytics, Nielsen SiteCensus or Yahoo Web Analytics, high level metrics remain comparable; that is, they can be expected to lie within 10-20% of each other. This is surprisingly close given the plethora of accuracy assumptions that need to be considered when comparing different web analytics tools.

The more detailed the tracking becomes (for example, tracking transactions, custom variables, events and outbound links), the greater the discrepancies in metrics between web analytics tools will be.

Extrapolating this study to all page tag web analytics tools using first party cookies (if not first party cookies, why not?), high level metrics from different web analytics tools should be comparable. The big caveat is knowing whether a deployment/implementation of the tool follows best practice recommendations. Often this is the greatest limitation, which is why so few comparison studies are available.

Comment

Accuracy in the web analytics world is still a hot debate, though for the wrong reasons in my view.

For a business analyst, reporting a 10-20% error bar when comparing two measurement tools may sound large. However, it pales into insignificance when compared to the vagaries of tracking offline marketing metrics such as newspaper readership figures or TV viewing data. This is especially so considering that the link between readership/viewing figures and any actual “engagement” with the associated advertising is so tenuous.

Are you losing the battle with marketing teams obsessed with “uniques”, or winning the war of tracking trends and KPIs? I would be interested in your comments.

Hi Brian,

Here from Occam’s Razor.

Thanks again for the link. This is a very useful comparison chart.

Yulia

I’m posting here because of an experience we are having at the moment, which has led our company to investigate the accuracy of the various analytics tools.

Our group has a number of international divisions; one of them, a UK product company, has an Enterprise Risk Management product it has been promoting online.

It purchased banner ads with a major site that carries much of the key news in the sector, along with sector specific jobs etc, and has a reported monthly unique user base in 5 figures.

During the first month of the campaign we received only 6 hits from this site, which really surprised us, as our ad campaign on Google PPC was light years ahead of this.

We contacted the ad site vendor and raised this with him, explaining that we were surprised to have only 6 hits recorded in Google Analytics coming through from his site. He expressed surprise, as his data shows almost 300 click-throughs on the banner to us.

As you can see, this is confusing. We’re not expecting thousands or millions of clicks, as ERM software is not that interesting and we accept that not many people want risk software, but the core issue here is how to explain the variance between the ad site’s figures and ours.

The number of click-throughs they are reporting is 40-50 times what we are actually capturing in Google Analytics. The above article talks about a 10-20% variance, but we are waaay outside of this.

Is there any reasonable explanation any of you might have for this that we might be overlooking?

Dee: This sounds very much like an implementation issue. The following whitepaper (updated just last week!) details pretty much everything related to accuracy. It’s also vendor-agnostic:

http://www.advanced-web-metrics.com/understanding-accuracy

Brian: The ranges of discrepancy between the two tools are similar to the ones you specified for Yahoo Web Analytics. Also, the group of pages considered for the evaluation has been verified to have both tracking codes.

Since the GA code is included right after the SiteCatalyst code, simple logic tells us that for a high-traffic website GA should report fewer pageviews. However, it is consistently the opposite.

That’s why I was wondering what the reason behind this discrepancy could be, and whether differences between the tools themselves could be the cause.

Thanks,

Jose

Jose – even if the page tags could theoretically execute at exactly the same time, there will always be differences in metrics. The following whitepaper (updated just last week!) details pretty much everything related to accuracy. It’s also vendor-agnostic:

http://www.advanced-web-metrics.com/understanding-accuracy

Jose: How large a discrepancy are you seeing? Assuming a best practice implementation of both tools (that is a very large assumption!), I would be surprised if the differences are greater than the ones I show here.

Pageview metrics are pretty standard in terms of definition and methodology so I doubt this would account for a large difference. If the difference is large, please ping over the URL of a site in question and I’ll take a quick look.

Hi

This is indeed a very interesting post. I’ve been looking for this kind of comparison for a while.

As it happens, I have a comparison of my own. I’ve noticed that in general the number of Page Views tracked by Google Analytics is consistently higher than the number reported by Omniture SiteCatalyst for a group of pages tagged with both tracking codes. I have seen this pattern on multiple websites, even when the GA tracking code is placed after the SiteCatalyst one.

I wonder why this is happening.

– Is it because of any major technology difference used by the two tools? Which is the difference?

– Is GA making an asynchronous call while SiteCatalyst is waiting for the page to load completely, or waiting for the tracking server to reply, before counting the page view?

– Or is it only because of differences in how Google and Omniture define a Page View? If so, what are the differences?

Thanks,

Jose

Gerry: Your comments brought a smile to my face – we live in similar worlds! Throughout the post I assume a best practice install of the tools (I should edit/clarify this). This is why I used my own site as one example, as I have complete control over the server and the HTML.

Providing a best practice implementation of GA is actually how my business makes its money. With confidence in the data, you can really go places with experimenting and optimising.

I find the product is considered good enough that clients wish to invest in understanding it. Once that is in place, implementation projects follow, which lead on to consulting and to me acting as the agent of change (jeez, I sound like Obama!). Anyhow, it’s a great business model: collect and report data for free, invest in the knowledge and the know-how…

Neo/Web Management: I sympathize with you. It really does surprise me that some people have so much faith in Alexa when the accuracy debate around “similar”, though much more advanced, off-site metrics providers is raging so fiercely: view Google search results for comments around comScore, Nielsen NetRatings, Hitwise, Compete etc. Look out for an article on this from me in the near future…

For Alexa, the sample size is small (I have never met anyone with the Alexa toolbar installed), very US centric (though maybe that suits you), and heavily biased towards the webmaster community (hardly your average web user).

Good luck with your endeavours, though I hope you find more enlightened clients…

Yep, I too have seen that some people ask for Alexa rankings while others go by Google.

The problem I find is that the tools are only as good as the implementation, and most implementations have fundamental flaws: carried out by IT people who don’t care, used by marketing people who think it’s 100% accurate, and then abused by management who think in KPI land (conversion up? excellent – we just switched off the forum because forum users converted at a lower rate, even though they were practically free and most would convert at some point…)

You are right, Brian; most people in our industry face this type of problem. One of my clients believes in Alexa and its rankings. Although Google Analytics shows good results for his site and he is getting good business, he thinks we are not working hard because his Alexa rank is not so good.

Steve: Good questions. I saw the same level of discrepancies when segmenting geographically by country or by browser type.

The accuracy whitepaper referenced in the post explains the reasons for these. Essentially, the key to successfully using multiple web analytics tools is not to use them to measure the same metrics, i.e. use them to complement each other. That said, if you are comparing the same metrics, then the trends will be accurate.

For example, if you notice a 10% increase in search engine traffic week on week in one tool, then this should also show in the other tool(s), regardless of the absolute numbers. If you don’t see this, it suggests one or more of the implementations is weak.

HTH, Brian
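The trend check described in the reply above can be sketched as follows. The counts are hypothetical, and the 3 percentage-point tolerance for "the same trend" is an illustrative assumption rather than an industry standard.

```python
def week_on_week_change(previous: int, current: int) -> float:
    """Fractional change from one week to the next, e.g. 0.10 for +10%."""
    if previous <= 0:
        raise ValueError("previous week's count must be positive")
    return current / previous - 1.0


def trends_agree(tool_a: tuple[int, int], tool_b: tuple[int, int],
                 tolerance: float = 0.03) -> bool:
    """True if both tools report a similar week-on-week change.

    Each argument is (previous_week, current_week) for the same metric,
    e.g. search engine visits. The default tolerance is an assumption.
    """
    change_a = week_on_week_change(*tool_a)
    change_b = week_on_week_change(*tool_b)
    return abs(change_a - change_b) <= tolerance


# Hypothetical search engine visits: the absolute counts differ by about 12%,
# but both tools show the same 10% rise.
ga = (5000, 5500)
other = (4460, 4906)
print(trends_agree(ga, other))  # True
```

Comparing relative changes rather than absolute counts is what lets two tools with a constant offset still agree.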

Brian, any thoughts on *why* the discrepancies?

Recognising the inherent woolliness in any such answer. 🙂

Clearly there are plenty of possible issues – not least the untagged pages. I’m wondering if you have done, or can do, any simple slicing and dicing to show why the discrepancies occur: by ISP, country, browser, OS etc.

I agree that a 10-20% difference isn’t much compared with TV viewing figures etc., but it’s a huge difference for what are fundamentally identical data collection methods. OK, so my logs bias comes through again. 😉

My thinking is that if a particular group shows up as an issue, that could lead to possible solutions. Or not.

A cool trick to trial further would be an otherwise identical website, session-load balanced across multiple servers, with each server separately registered under a different GA account. See if GA is within +/- 1% of itself, or worse.

Not so much comparing the tools, but comparing the accuracy within a given tool. I’d *expect* close to a 1-3% difference. But … is that in fact so? 🙂

Cheers!

– Steve
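The self-comparison experiment Steve proposes depends on each session sticking to one server (and therefore one GA account). A minimal sketch of that split, assuming sessions are identified by a string ID, could hash the ID to pick an account; the account names, session IDs and helper functions are all hypothetical.

```python
import hashlib
from collections import Counter


def assign_account(session_id: str, accounts: list[str]) -> str:
    """Deterministically map a session ID to one tracking account.

    Hashing (rather than choosing at random per request) keeps every request
    in a session on the same server, so each account sees whole sessions.
    """
    if not accounts:
        raise ValueError("need at least one account")
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(accounts)
    return accounts[index]


def per_account_error(routed: dict[str, int], reported: dict[str, int]) -> dict[str, float]:
    """Visits reported by each account relative to sessions routed to it (0.01 = +1%)."""
    return {acct: reported[acct] / routed[acct] - 1.0 for acct in routed}


# Hypothetical accounts, one per load-balanced server.
accounts = ["account-1", "account-2", "account-3"]

# Sessions the load balancer sent to each server (hypothetical session IDs).
routed = Counter(assign_account(f"session-{i}", accounts) for i in range(9000))
print(dict(routed))
```

After the trial, the visits each account reports would be passed to `per_account_error` alongside `routed`; Steve’s expected 1-3% self-consistency corresponds to values between roughly -0.03 and +0.03.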

Roman: That’s a good point, and very sloppy of me (fat fingers!). I have clarified in the post that it’s pageviews/visit.

Thanks for the feedback.

Hi Brian, were you comparing Pageviews/Visit or Pages/Visitor? This differs between the bullets and the results tables ;-).

I like this comparison. It shows where we should be looking if we want to compare tracking tools.

Thanks!